Society recognizes cryptography’s important role in protecting sensitive information from unauthorized disclosure or modification. However, the correct and bug-free implementation of a cryptographic algorithm and the environment in which it executes are critical for security.

To assess the security of real hardware and software cryptographic implementations, NIST established the Cryptographic Module Validation Program (CMVP) in 1995 to validate cryptographic modules against the security requirements of FIPS 140 (originally FIPS 140-1, now FIPS 140-2). The CMVP is run jointly with the Government of Canada for the benefit of Federal agencies in the US and Canada, but its actual impact is much wider: many industry groups and local governments in the US and Canada, and even other countries around the world, also rely on it.

Current industry and government cybersecurity recommendations advise organizations to patch promptly, including applying patches that update cryptographic modules. Technology products are very complex, and the cost of testing them fully enough to guarantee trouble-free use is prohibitively high. As a result, products contain vulnerabilities that hackers and the companies providing the products compete to discover first: the companies to fix them, the hackers to exploit them. Patching changes the game for hackers and slows their progress, so patching promptly is a way of staying ahead of security breaches. However, patching also changes the environment in which a cryptographic module runs and may change the module itself, invalidating the previously validated configuration. Federal users, and everybody else who depends on validated cryptography, therefore face a dilemma: frequent updates and patches are important for staying ahead of attackers, but the existing NIST validation process does not permit rapid implementation of these updates while maintaining validated status.

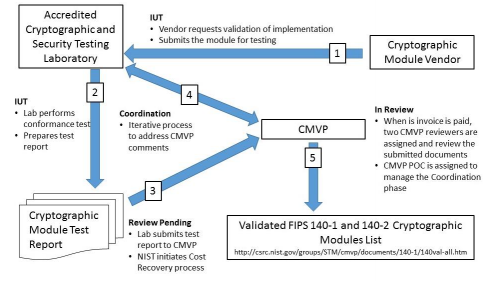

The existing CMVP leverages independent third-party testing laboratories to test commercial off-the-shelf cryptographic modules supplied by industry vendors. The structure and process of the current CMVP are illustrated in Figure 1. Testing relies on manual techniques, and validation relies on human-readable test reports written as free-form English prose.

Figure 1: Current CMVP Process

As technology progresses and cryptography becomes ubiquitous in the information infrastructure, the number and complexity of modules to be validated increase. The volume of cryptographic module validations has outstripped the available human resources of Vendors, Labs and Validators alike. When evaluation package submissions finally reach the validation queue, inconsistent and possibly incomplete presentation of evidence further strains the ability of a finite number of Validators to provide timely turnaround.

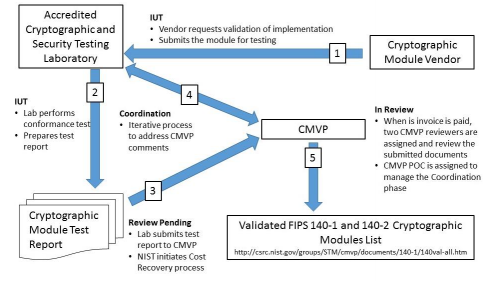

NIST has embarked on a mission to rebuild its cryptographic validation programs by working together with industry and other government agencies – see Figure 2.

Figure 2: Future CMVP Process

The high-level, two-phase research and development plan is outlined below.

Phase I

I.1. Develop a JSON schema for test reporting that covers the security assertions and the derived test requirements (DTR) for FIPS 140-2, Security Requirements for Cryptographic Modules.

I.2. Develop an XCCDF schema for Security Policies for Cryptographic Modules that corresponds to the specific requirements for security policies in the DTR.

I.3. Develop a protocol for submitting cryptographic module test reports and security policies, based on the Automated Cryptographic Validation Protocol (ACVP) for algorithm testing – see https://github.com/usnistgov/ACVP – but adapted for cryptographic module test reporting.

I.4. Research, develop and/or adopt best-in-class, robust and accurate deep learning neural networks for sentiment analysis (see progress reported in a recent research paper).

I.5. Use the test results from the companies participating in the pilots for supervised machine learning (SML), training the system to review and assess the correctness and completeness of module test results. Use the submitted security policies for SML as well, training the system to assess the completeness and correctness of the data in the security policies. Each participating company develops different technology and cryptographic modules, subject to different subsets of the FIPS 140-2 security requirements, which gives us a small but diverse data set to start with at this stage. The difficulty is that participants may use different testing methodologies for the same security requirements: the same abstract FIPS 140-2 requirement may be tested differently in different technology domains.
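Items I.1 and I.3 replace free-form reports with structured, machine-submitted data, which is what makes automated completeness checks possible. The following is a minimal Python sketch of such a check, assuming a hypothetical report layout: a top-level `entries` array whose items carry `assertionId`, `dtrId`, `result` and `evidence` fields. These field names, the allowed result values and the sample identifiers are illustrative only and are not taken from any published NIST schema.

```python
from __future__ import annotations

import json

# Hypothetical required fields and types for one report entry; NOT a published NIST schema.
REQUIRED_FIELDS = {
    "assertionId": str,  # security assertion being tested
    "dtrId": str,        # derived test requirement exercised
    "result": str,       # expected to be "pass" or "fail" (hypothetical values)
    "evidence": str,     # what the tester did and observed
}
ALLOWED_RESULTS = {"pass", "fail"}


def check_entry(entry: dict) -> list[str]:
    """Return the problems found in one report entry; an empty list means none were found."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in entry:
            problems.append(f"missing field: {field}")
        elif not isinstance(entry[field], expected_type):
            problems.append(f"wrong type for {field}: expected {expected_type.__name__}")
    result = entry.get("result")
    if isinstance(result, str) and result not in ALLOWED_RESULTS:
        problems.append(f"invalid result: {result}")
    evidence = entry.get("evidence")
    if isinstance(evidence, str) and not evidence.strip():
        problems.append("empty evidence")
    return problems


def check_report(text: str) -> dict[str, list[str]]:
    """Parse a JSON report and map each problematic entry's assertionId to its problems."""
    report = json.loads(text)
    findings = {}
    for index, entry in enumerate(report.get("entries", [])):
        problems = check_entry(entry)
        if problems:
            key = str(entry.get("assertionId", f"entry[{index}]"))
            findings[key] = problems
    return findings


# Illustrative report; the module name and identifiers are made up for this example.
sample = json.dumps({
    "moduleName": "Example Crypto Module",
    "entries": [
        {"assertionId": "AS01.01", "dtrId": "TE01.01.01", "result": "pass",
         "evidence": "Reviewed the module specification and confirmed the boundary."},
        {"assertionId": "AS02.03", "dtrId": "TE02.03.01", "result": "passed",
         "evidence": "   "},
    ],
})
print(check_report(sample))
```

A production version would more likely express these rules in JSON Schema and run an existing validator; the hand-written checks here only keep the sketch dependency-free. Either way, this kind of mechanical check catches the missing or empty evidence that Validators currently have to find by reading prose.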

Phase II

II.1. Research and develop word polarity models suitable for CMVP test report assessments. Collaborate with leading computational linguistics experts from academia to research and develop robust and realistic word polarity models.

II.2. Expand the training data set with synthetic data created from real test reports and security policies submitted to the CMVP over the last several years.

II.3. Use the synthetic data set to perform SML on the expanded data.

II.4. Expand the capabilities of the system by applying it to test data from types of technologies not covered by the synthetic set.

II.5. Deploy the trained system in the automated module validation program.
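Items I.4 and II.1 call for deep learning sentiment analysis and word polarity models. The basic idea of word polarity can be illustrated with a much simpler lexicon-based scorer; this is a swapped-in technique for illustration, not the neural approach the plan describes. In this sketch the lexicon words, their weights and the one-word negation rule are all hypothetical, and any sentence scoring below zero is flagged for a Validator's attention.

```python
from __future__ import annotations

import re

# Hypothetical polarity lexicon for test-report language; the weights are illustrative only.
POLARITY = {
    "verified": 1.0, "confirmed": 1.0, "passed": 1.0, "complies": 1.0, "documented": 0.5,
    "failed": -1.0, "missing": -1.0, "incomplete": -1.0, "unable": -1.0, "unclear": -0.5,
}
# Words that flip the polarity of the word immediately after them (a deliberately crude rule).
NEGATORS = {"not", "no", "never"}


def sentence_polarity(sentence: str) -> float:
    """Sum the lexicon weights of a sentence's words, flipping a word preceded by a negator."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    score = 0.0
    for i, tok in enumerate(tokens):
        weight = POLARITY.get(tok, 0.0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight
        score += weight
    return score


def flag_sentences(text: str) -> list[str]:
    """Split text into sentences and return those with negative overall polarity."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if sentence_polarity(s) < 0]


evidence = (
    "The tester verified that all services are documented. "
    "Self-tests were not verified. "
    "The tester was unable to confirm key zeroization; the evidence is incomplete."
)
for sentence in flag_sentences(evidence):
    print("needs review:", sentence)
```

A fixed, hand-written lexicon like this misses context beyond a single preceding negator and knows nothing about domain-specific phrasing, which is why the plan calls for learned polarity models developed with computational linguistics experts.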

Phase I establishes the infrastructure needed to conduct large-scale SML for the automated FIPS 140-2 validation program. Phase II builds on the fundamentals from Phase I and extends the capabilities of SML to handle the full range of incoming test reports.
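The supervised learning step in items I.5 and II.3 can be pictured as a text classifier trained on labeled test evidence. The following is a minimal sketch using multinomial naive Bayes with add-one smoothing, chosen only because it fits in a few lines; it is not the deep learning approach the plan names, and the training snippets and the `adequate`/`inadequate` labels are hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> NaiveBayes:
        self.classes = sorted(set(labels))
        label_counts = Counter(labels)
        # Log prior probability of each class.
        self.log_priors = {c: math.log(label_counts[c] / len(labels)) for c in self.classes}
        # Word frequencies per class.
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text: str) -> str:
        """Return the class with the highest posterior log-probability for the text."""
        v = len(self.vocab)
        best_class, best_score = None, -math.inf
        for c in self.classes:
            score = self.log_priors[c]
            for tok in tokenize(text):
                if tok not in self.vocab:
                    continue  # words never seen in training carry no evidence
                score += math.log((self.word_counts[c][tok] + 1) / (self.totals[c] + v))
            if score > best_score:
                best_class, best_score = c, score
        return best_class


# Hypothetical labeled evidence: does the text describe what the tester actually did?
TRAIN = [
    ("The tester executed the power-up self-tests and observed the expected output.", "adequate"),
    ("The tester verified each service against the security policy.", "adequate"),
    ("The tester observed zeroization of all plaintext keys.", "adequate"),
    ("Vendor states the module complies.", "inadequate"),
    ("See vendor documentation.", "inadequate"),
    ("Requirement is met per vendor statement.", "inadequate"),
]
model = NaiveBayes().fit([t for t, _ in TRAIN], [label for _, label in TRAIN])
print(model.predict("The tester observed the expected self-test output."))
print(model.predict("Vendor states the requirement is met."))
```

With six training sentences this only demonstrates the mechanics; the plan's point is that pilot submissions (Phase I) and synthetic data derived from past CMVP reports (Phase II) would supply enough labeled examples for a far stronger model.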

An unofficial archive of your favorite United States government website