Explainable Artificial Intelligence and Autonomous Systems

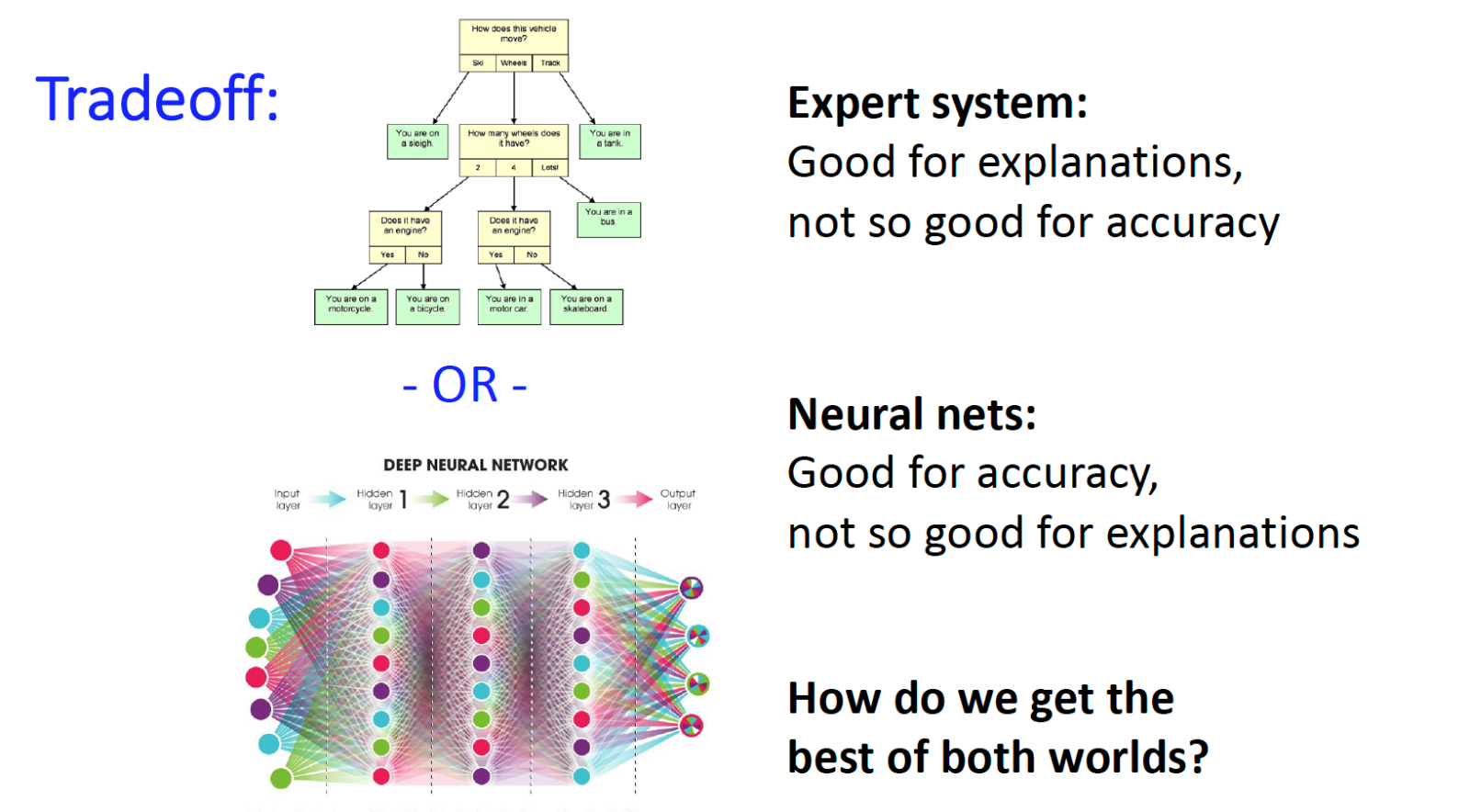

Artificial intelligence and machine learning (AI/ML) systems can often equal or surpass human performance in applications ranging from medical systems to self-driving cars and defense. But ultimately a human must take responsibility, so it is essential to be able to justify the AI system's action or decision. What combinations of factors support the decision? Why was another action not taken?

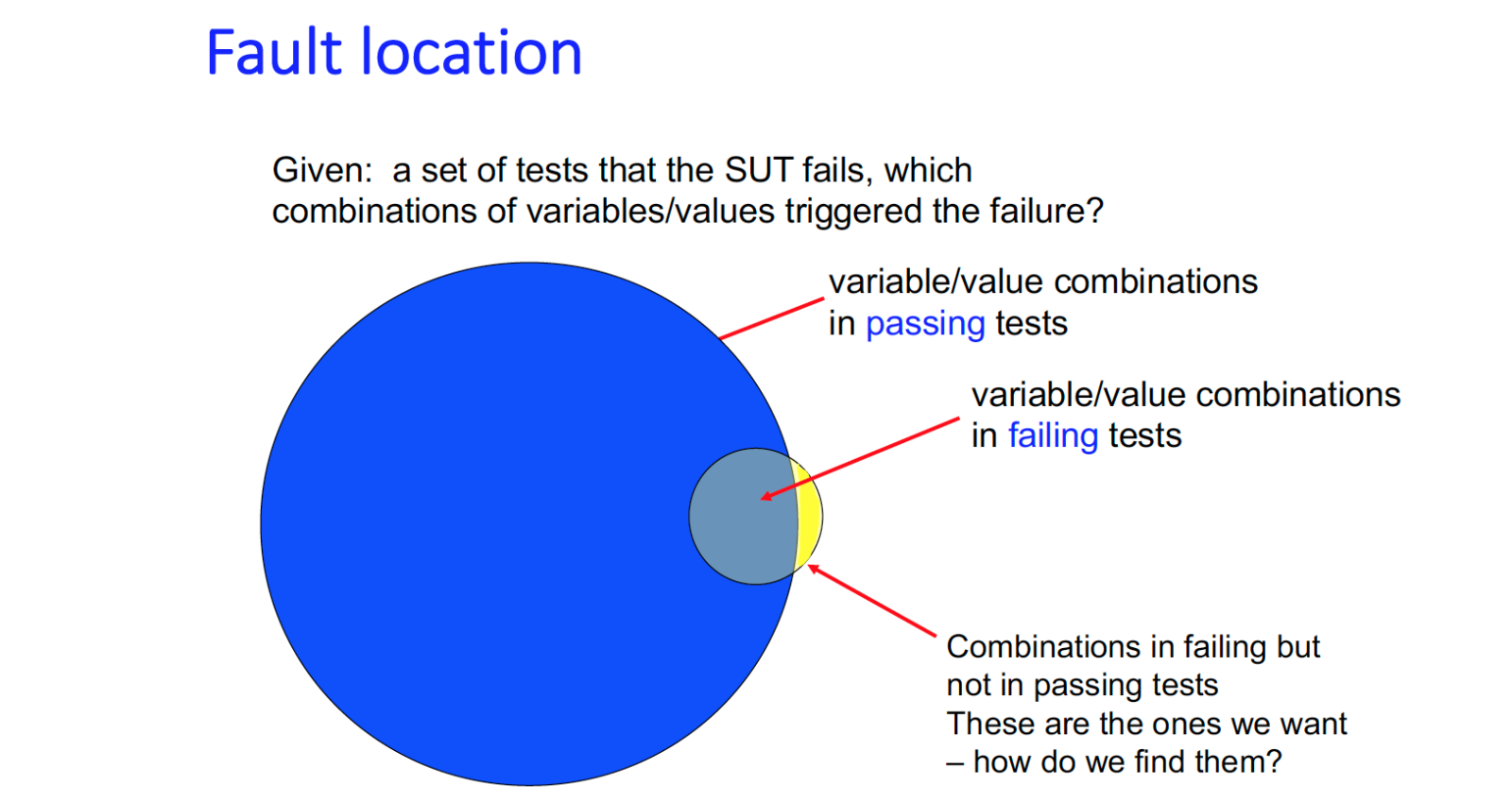

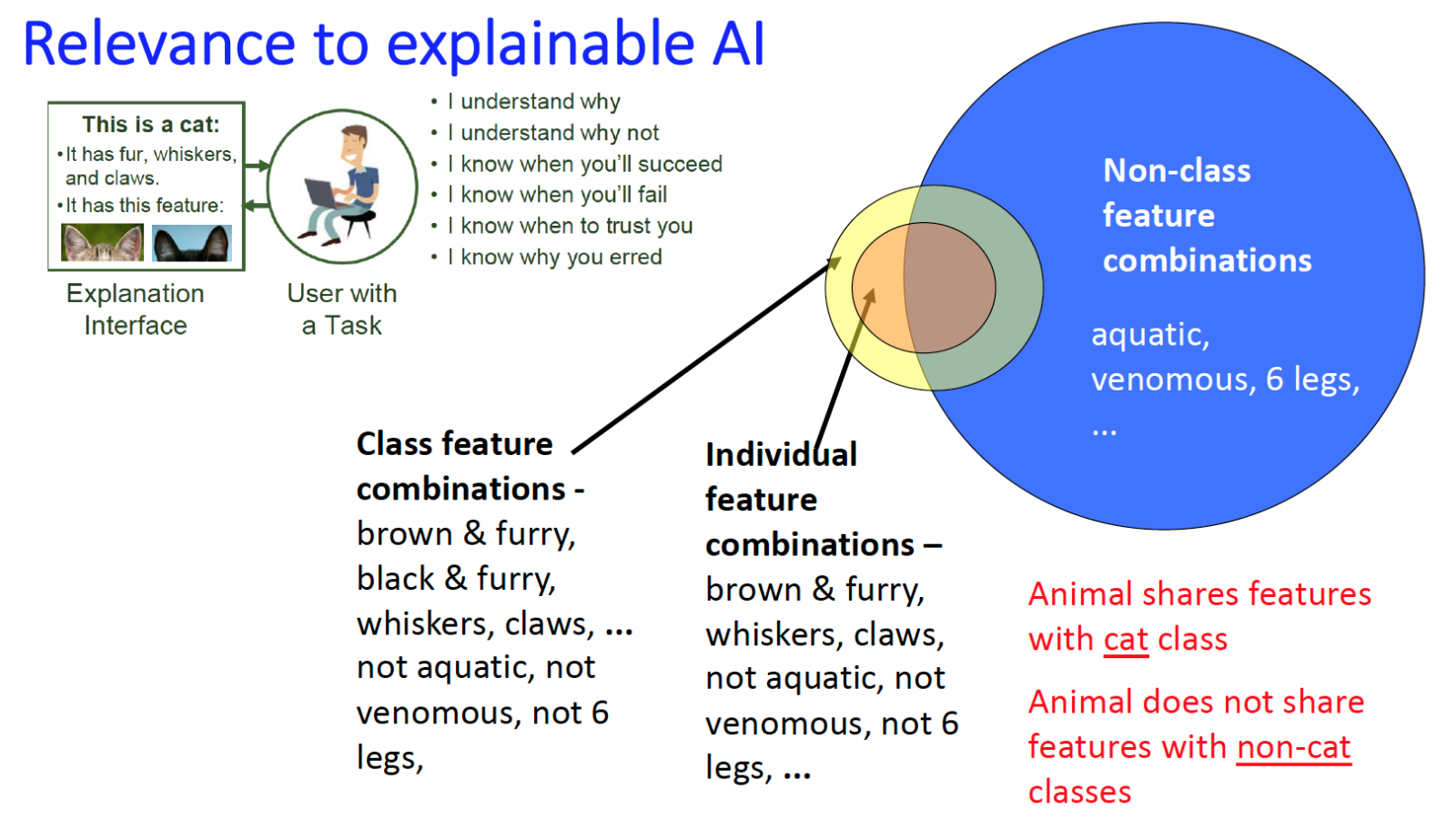

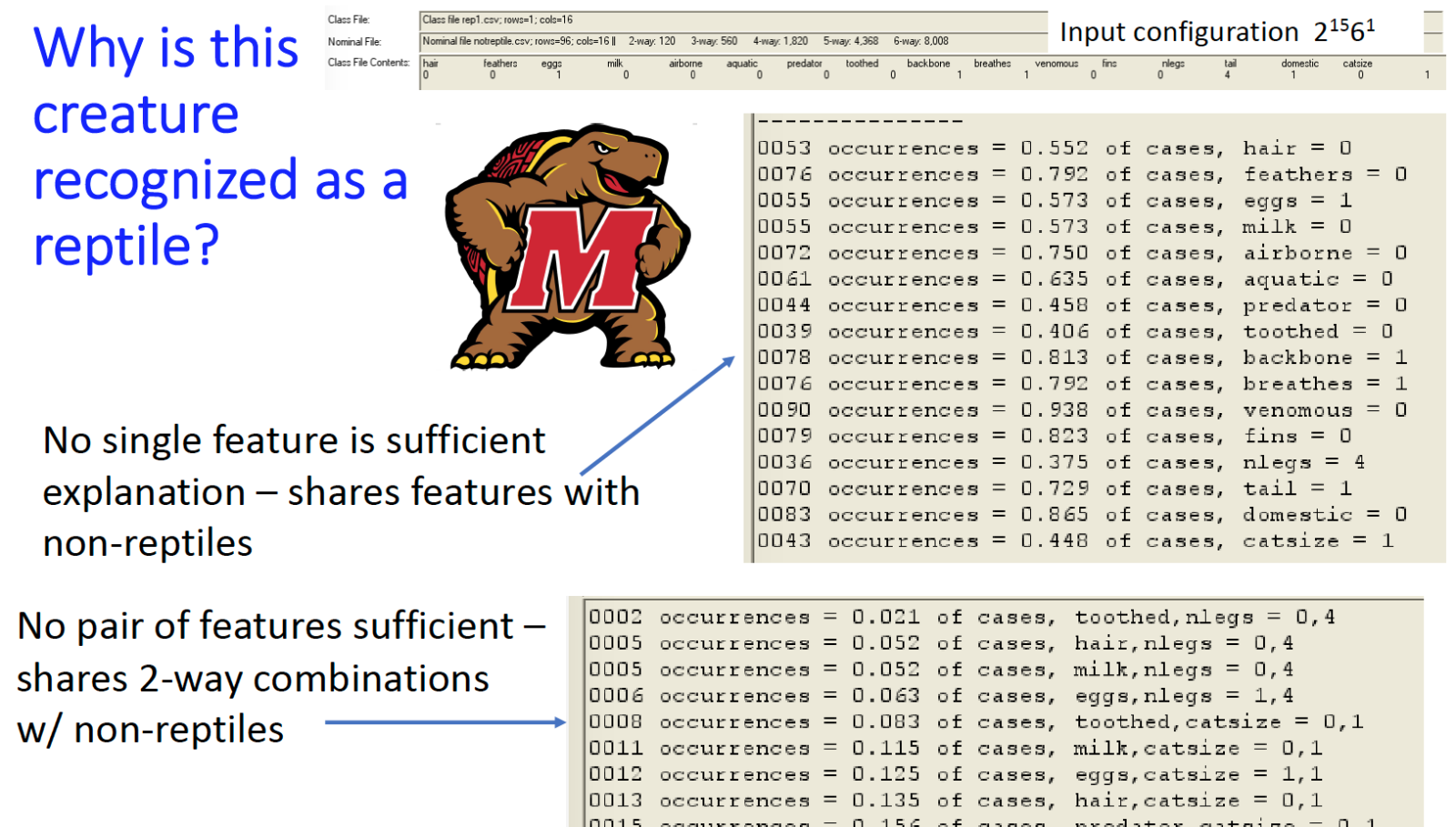

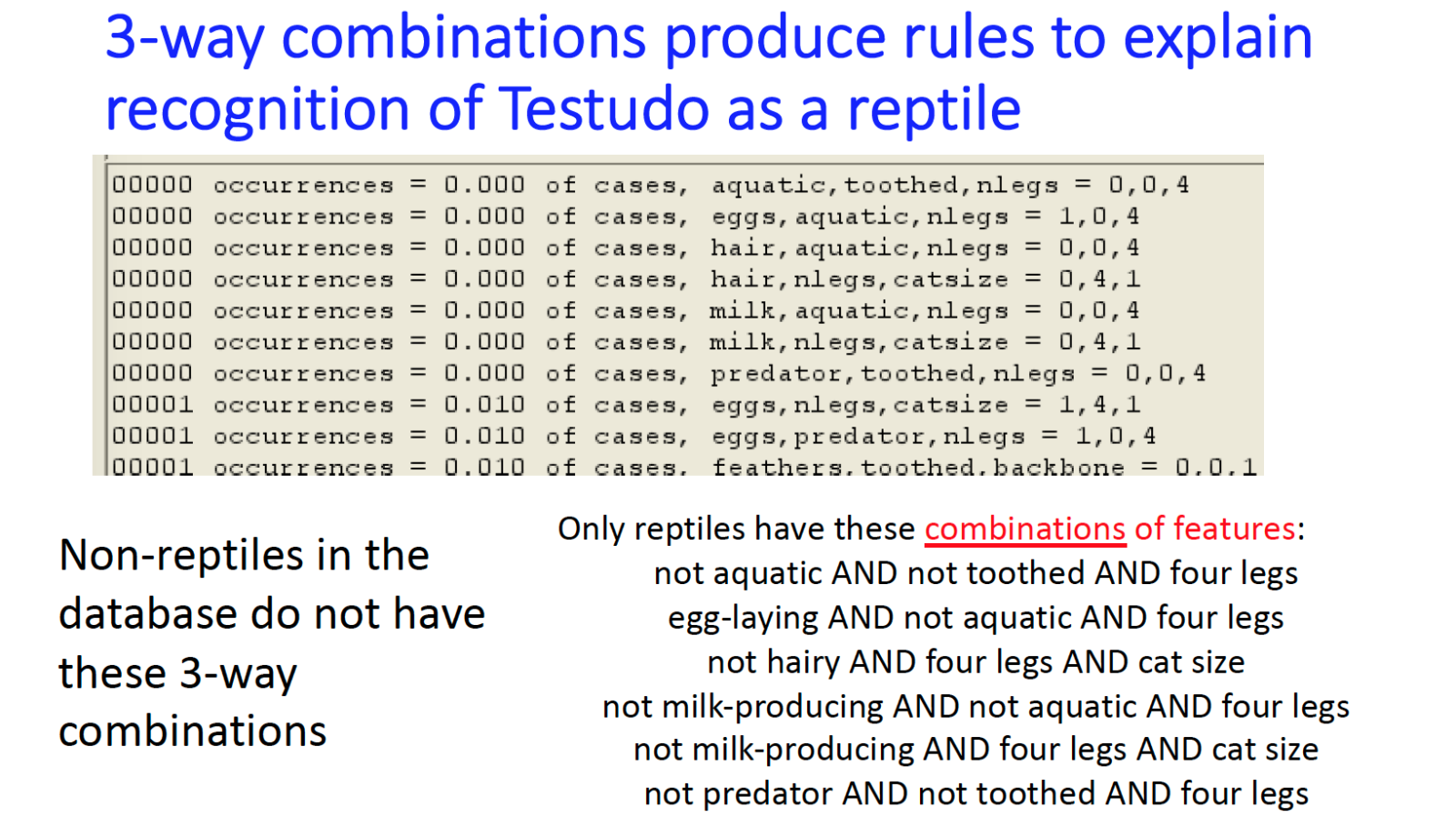

Combinatorial methods make possible an approach to producing explanations or justifications of decisions in AI/ML systems. This approach is particularly useful in classification problems, where the goal is to determine an object’s membership in a set based on its characteristics. These problems are fundamental in AI because classification decisions are used for determining higher-level goals or actions.

We use a conceptually simple scheme to make it easy to justify classification decisions: identifying combinations of features that are present in members of the identified class but absent or rare in non-members. The method has been implemented in a prototype tool called ComXAI, which we are currently applying to machine learning problems. Explainability is key both to using autonomous systems and other applications of AI and machine learning, and to assuring their safety and reliability.
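The idea of finding feature combinations that are common in a class but absent or rare outside it can be illustrated with a minimal sketch. This is not the ComXAI implementation, whose internals are not described here. The function names, the toy data, and the `max_nonmember_rate` threshold are all assumptions made for illustration. The sketch enumerates every t-way combination of feature values in one classified instance and keeps those that occur in class members but in at most a chosen fraction of non-members.

```python
from itertools import combinations


def match_rate(pairs, rows):
    """Fraction of rows that contain every (feature, value) pair."""
    if not rows:
        return 0.0
    hits = sum(all(row.get(f) == v for f, v in pairs) for row in rows)
    return hits / len(rows)


def distinguishing_combinations(instance, members, non_members,
                                t=2, max_nonmember_rate=0.0):
    """Hypothetical helper: t-way feature-value combinations of `instance`
    that occur among class members and are rare among non-members."""
    results = []
    for combo in combinations(sorted(instance), t):
        pairs = tuple((f, instance[f]) for f in combo)
        member_rate = match_rate(pairs, members)
        nonmember_rate = match_rate(pairs, non_members)
        if member_rate > 0 and nonmember_rate <= max_nonmember_rate:
            results.append((pairs, member_rate, nonmember_rate))
    # Most common among members first, then rarest among non-members.
    results.sort(key=lambda r: (-r[1], r[2]))
    return results


# Toy data (invented for illustration): is the animal a bird?
members = [
    {"lays_eggs": True, "warm_blooded": True, "flies": True},
    {"lays_eggs": True, "warm_blooded": True, "flies": False},
]
non_members = [
    {"lays_eggs": True, "warm_blooded": False, "flies": False},  # reptile
    {"lays_eggs": False, "warm_blooded": True, "flies": True},   # bat
    {"lays_eggs": True, "warm_blooded": False, "flies": True},   # insect
]
instance = members[0]

single = distinguishing_combinations(instance, members, non_members, t=1)
pairs = distinguishing_combinations(instance, members, non_members, t=2)
print(single)
print(pairs)
```

In this toy data no single feature value separates the class from the non-members, but the 2-way combination of `lays_eggs` and `warm_blooded` appears in every member and in no non-member, so it can serve as a justification for the classification. Raising `max_nonmember_rate` above zero would also admit combinations that are merely rare outside the class.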

R. Kuhn, R. Kacker, Explainable AI, NIST presentation (available as PDF and PPT).

R. Kuhn, R. Kacker, An Application of Combinatorial Methods for Explainability in Artificial Intelligence and Machine Learning. NIST Cybersecurity Whitepaper, May 22, 2019.

Related: D.R. Kuhn, D. Yaga, R. Kacker, Y. Lei, V. Hu, Pseudo-Exhaustive Verification of Rule Based Systems, 30th International Conference on Software Engineering and Knowledge Engineering, July 2018.

ComXAI - tool implementing these methods, to be released in November 2019.